In this study, we propose an unsupervised method for music video emotion analysis using music video content available on the Internet. The music and video information is processed through a multimodal architecture that uses audio–video information exchange and a boosting method. We also produced a labelled dataset and compared supervised and unsupervised methods for emotion classification.

The difficulty of annotating emotion precisely may be one reason why research in this domain has been limited and why no standard dataset has been produced until now.

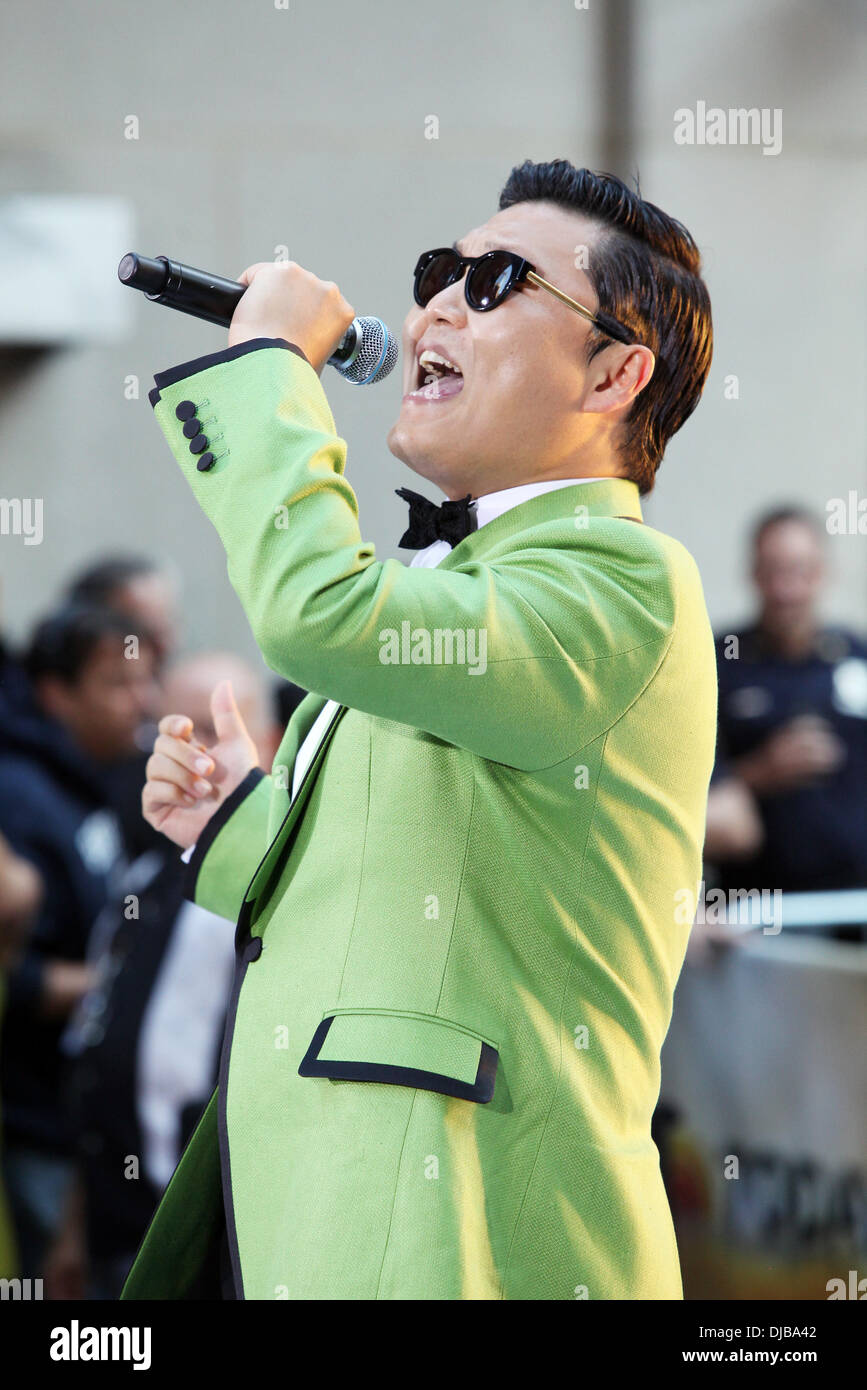

Affective computing has long struggled with precise annotation because emotions are highly subjective and vague. Music video emotion is especially complex because of the diverse textual, acoustic, and visual information involved, which can take the form of lyrics, the singer's voice, sounds from different instruments, and visual representations.
